A recent research blog post by CyberArk Labs revealed how ChatGPT could be used to create polymorphic malware, a highly advanced type of malicious code capable of evading security products.

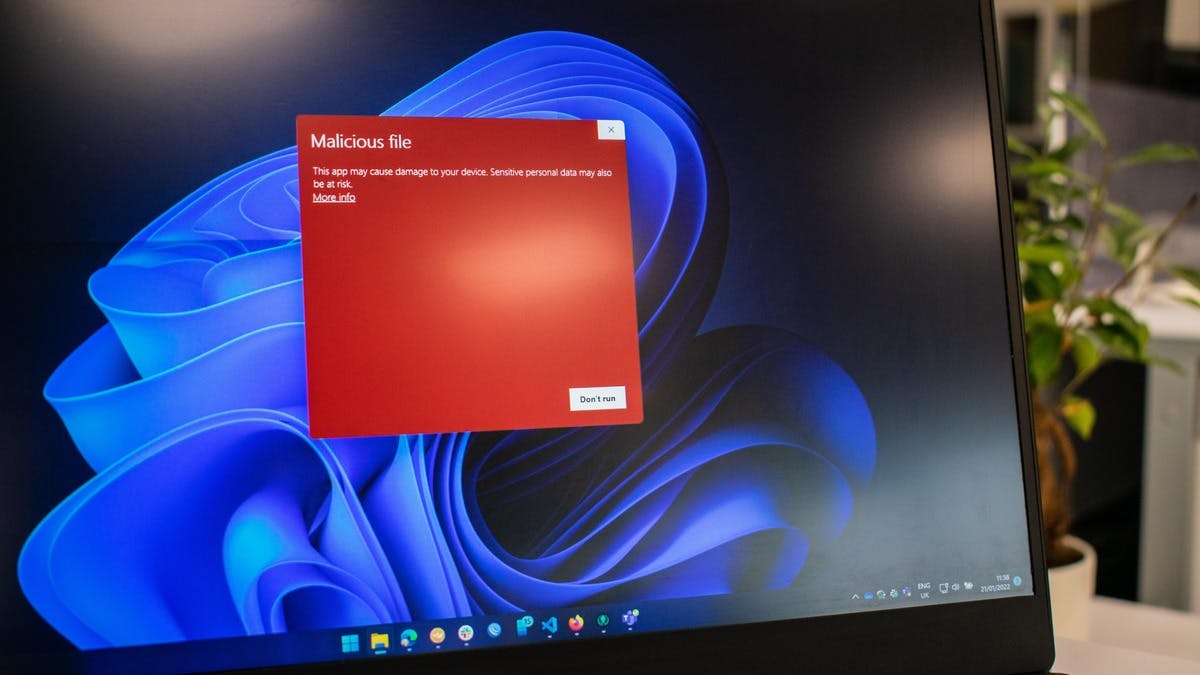

This type of malware is known for its ability to change its code, or “morph,” in order to evade detection by security software.

The researchers at CyberArk Labs were able to bypass ChatGPT’s content filters, which are designed to prevent the generation of malicious code, by repeatedly querying the chatbot. Each query returned a unique, functional, and validated piece of code, resulting in polymorphic malware that shows no malicious behaviour while stored on disk and often contains no suspicious logic while in memory.

“Polymorphic malware is complicated for security products to deal with because you can’t really detect them. Besides, they usually don’t leave any trace on the file system as their malicious code is only handled while in memory. Additionally, if one views the executable\elf, it probably looks benign,” CyberArk Labs noted.

This modularity and adaptability make such malware highly evasive against security products that rely on signature-based detection, so traditional tools such as antivirus software may fail to detect or block it. As a result, mitigating this type of malware becomes more difficult and time-consuming.
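To illustrate why exact signatures struggle with code that keeps changing its form, here is a minimal Python sketch of hash-based signature matching. It is a toy, not how any particular antivirus product works: the `KNOWN_BAD` set, the helper names and the two harmless sample scripts are hypothetical, and real engines combine signatures with pattern rules, heuristics and behavioural analysis.

```python
import hashlib


def sha256_signature(data: bytes) -> str:
    """Return the hex SHA-256 digest, used here as a toy 'signature'."""
    return hashlib.sha256(data).hexdigest()


# Hypothetical signature database holding digests of samples already
# classified as bad. The "samples" are harmless placeholder scripts that
# only serve to demonstrate the matching logic.
original_sample = b"total = 1 + 2\nprint(total)\n"
KNOWN_BAD = {sha256_signature(original_sample)}


def is_flagged(data: bytes) -> bool:
    """Exact-match signature check: flags only byte-identical content."""
    return sha256_signature(data) in KNOWN_BAD


# A functionally equivalent variant: same behaviour, one identifier renamed.
mutated_sample = b"result = 1 + 2\nprint(result)\n"

print(is_flagged(original_sample))  # True
print(is_flagged(mutated_sample))   # False
```

Renaming a single variable leaves the behaviour unchanged but produces an entirely different digest, so the exact-match lookup misses the variant. Code that is rewritten on every run defeats this kind of check in the same way.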

The chatbot’s natural language processing and code generation capabilities make it a valuable tool for less skilled threat actors who may lack the technical expertise to write their own malware.

According to a recent blog post from security firm Check Point Research, the release of ChatGPT, a large language model developed by OpenAI, has raised concerns about its potential use by cyber criminals.

The researchers suggest that the ease with which ChatGPT generates code could lower the barrier to entry for would-be attackers. It is still uncertain whether ChatGPT will become a popular tool in the Dark Web community, but the researchers noted that cybercriminals have already shown interest in using the technology for malicious purposes.

The malicious use of AI is not a new phenomenon: cybercriminals have been using AI to automate various aspects of their operations for some time. However, the release of ChatGPT has drawn attention to the potential dangers of AI-generated code, because it allows even less skilled threat actors to launch sophisticated attacks.

Last month, a thread named “ChatGPT – Benefits of Malware” appeared on a popular underground hacking forum. The thread’s author revealed that he had been testing ChatGPT to recreate techniques described in research publications about common malware.

He shared the code of a Python-based stealer that searches for common file types, such as documents and images, copies them to a random folder inside the Temp folder, compresses them into a ZIP archive, and uploads them to an FTP server. A “stealer” is malware designed to steal sensitive information from an infected computer.

“Previously, people published that one can query ChatGPT for malicious purposes of obtaining damaging code. We showcase how we bypassed the content filter and how we can create malware that, in runtime queries, enables ChatGPT to load malicious code,” CyberArk Labs said.

“The malware doesn’t contain any malicious code on the disk, as it receives the code from ChatGPT, then validates it (checks if the code is correct), and then executes it without leaving a trace. Besides that, we can ask ChatGPT to ‘mutate’ our code,” the researchers added.

Furthermore, the chatbot could potentially be used to generate code that evades detection by security software, making it even more dangerous.

CyberArk Labs also pointed to “the lack of detection of advanced malware that security products are not aware of,” adding: “At the moment, there is no public record of such use, as we are the first to publish it. As of now, we aren’t providing the public code for the full malware.”

“Keep in mind that the information and ideas discussed in this post should be used with caution, as using ChatGPT’s API to create malicious code is a serious concern and should not be taken lightly.”

In addition to the stealer, the same forum user also used ChatGPT to create a simple Java snippet that can be modified to run any program, including common types of malware such as ransomware, trojans, and keyloggers. This demonstrates how ChatGPT’s code generation can be used to create malicious software with minimal effort.

CyberArk Labs says its post is intended to raise awareness about the potential risks and encourage further research on this topic.

